How To Stop Infinite Loops In Bidirectional Syncs

Scenario

You’re excited to put a sync in place between the Salesforce Account object and the Customer table in your backend accounting software. You build a Link (Link A) that listens for changes on the Account object and sends them to the accounting system, and you build a Link (Link B) that listens for changes on Customer in the accounting system and sends them to Salesforce. Badda boom, bidirectional sync!

But there’s a problem: you create a new Account called Acme in Salesforce at 2:00pm, and Link A picks it up and writes it to the accounting system at 2:30pm. Link B runs at 2:45pm and sees a recently-edited Customer record, so it sends it off to Salesforce. Link A runs again, and sees that Acme was updated at 2:45pm, and since the last time Link A ran was 2:30pm, it’s a fresh change! Better send that highly-valuable new info over to the accounting system. And so on, and so on, and so on…we’ve just created a vampire record that will bounce back and forth between these systems until the end of time.

This is a side effect of doing bidirectional delta syncs between any integrated systems (it isn’t Valence-specific), and it’s not uncommon. Unfortunately, the more you investigate it, the more complicated it turns out to be. Let’s dig in.
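To make the loop concrete, here is a minimal simulation of two delta syncs feeding each other. It is only a sketch: the `System` class, `run_link` function, and integer clock are hypothetical stand-ins, not Valence code.

```python
from dataclasses import dataclass, field


@dataclass
class System:
    """A toy system that stores each record with a last-modified time."""
    name: str
    records: dict = field(default_factory=dict)  # key -> (values, modified_at)

    def write(self, key, values, now):
        self.records[key] = (values, now)

    def changed_since(self, since):
        return {k: v for k, (v, t) in self.records.items() if t > since}


def run_link(source, target, last_run, now):
    """Delta sync: copy everything modified after last_run, return the count."""
    changes = source.changed_since(last_run)
    for key, values in changes.items():
        target.write(key, values, now)  # the write itself bumps modified_at
    return len(changes)


salesforce = System("Salesforce")
accounting = System("Accounting")
salesforce.write("Acme", {"name": "Acme"}, now=0)  # the only real edit

last_a = last_b = -1
hops = []
for tick in range(1, 7):
    if tick % 2 == 1:  # Link A: Salesforce -> accounting
        hops.append(run_link(salesforce, accounting, last_a, tick))
        last_a = tick
    else:              # Link B: accounting -> Salesforce
        hops.append(run_link(accounting, salesforce, last_b, tick))
        last_b = tick

print(hops)  # [1, 1, 1, 1, 1, 1]
```

Every run moves one record even though nobody touched Acme after the first edit, because each delivery refreshes the modified time that the opposite Link is watching.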

Solutions

Below are some strategies for breaking the loop, with considerations, pros, and cons for each. There are many ways to tackle this problem because the circumstances in which it arises vary in subtle ways. The right strategy depends on whether you are running scheduled Links or realtime Links. It matters whether your record transformations are deterministic. It matters what your contention resolution needs are, and how you handle system of record.

The point is, take some time to think carefully about the specific circumstances you are solving for, the potential edge cases, and whether your plan covers them. Some of the solutions presented below are just feature toggles, and some are less prescriptive and more about arming you with patterns and ideas.

LocalSalesforceAdapter configuration

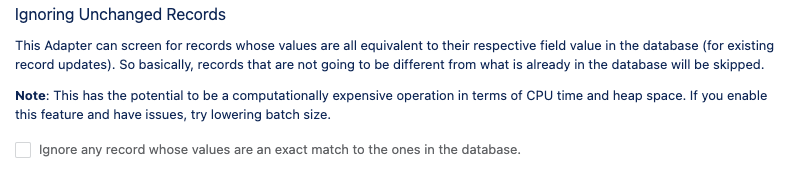

When using the LocalSalesforceAdapter as a target, there is a configuration option you can select right after you’ve chosen which field should be your upsert field:

This is your “easy button” for breaking the loop. If you turn this on, generally it’s all you’ll need to do and you can stop worrying about it.

Be aware that this method is a little inefficient: it allows a record created in Salesforce to go to the external system and then come back into Salesforce before it is squashed, so that’s one extra hop. In the big picture it’s usually not a problem. You just get some extra inbound records that you don’t care about, and they are marked as ignored.

Warning

This pattern will not work if your Link is not deterministic. 99% of Links are deterministic, meaning the same record with the same incoming field values will produce the same target record. However, as an example, there is one thing people like to have that will cause a Link to be non-deterministic: adding a timestamp for “last-synced”. If you are generating a timestamp in a Filter and writing it into Salesforce, then the same record will look different each time it arrives, and this pattern won’t work.
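Here is a small illustration of what “deterministic” means in practice. The field names and both transform functions are made up for the example. The point is only that stamping a sync time makes otherwise identical input produce different output.

```python
def deterministic_transform(source):
    """Same input always yields the same target record."""
    return {"Name": source["name"].strip(), "Phone": source["phone"]}


def stamped_transform(source, now):
    """Adds a hypothetical last-synced timestamp, so the output varies per run."""
    target = deterministic_transform(source)
    target["Last_Synced__c"] = now
    return target


incoming = {"name": "Acme ", "phone": "555-0100"}

# Two runs over the same source record.
same_without_stamp = (
    deterministic_transform(incoming) == deterministic_transform(incoming)
)
same_with_stamp = (
    stamped_transform(incoming, now="2024-01-01T14:30:00")
    == stamped_transform(incoming, now="2024-01-01T14:45:00")
)

print(same_without_stamp, same_with_stamp)  # True False
```

Any comparison that relies on “this record looks exactly like last time” will never match in the second case.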

Valence Fingerprinting

There is a Fingerprinting feature you can enable in the Link Settings for any Link.

When this is active, Valence uses hashes of each record to compare traveling records against past records, looking for exact matches. If the exact same record is being delivered twice in a row, the record is blocked. Blocked records disappear from the batch they are in, are not delivered, and no record snapshot is generated. There are two exceptions: if a record is unchanged but its previous attempt failed or was ignored, it is allowed to attempt delivery again (in case things have changed and it would succeed this time).
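The general idea can be sketched like this. This is not Valence’s implementation: the `FingerprintStore` class, the choice of SHA-256 over canonical JSON, and the outcome strings are all assumptions made for illustration.

```python
import hashlib
import json


def fingerprint(record):
    """Hash a record in a key-order-independent way (assumed scheme)."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class FingerprintStore:
    """Remembers the last fingerprint and outcome seen for each record key."""

    def __init__(self):
        self._last = {}  # record key -> (fingerprint, outcome)

    def should_deliver(self, key, record):
        previous = self._last.get(key)
        if previous is None:
            return True  # never seen before
        prev_print, prev_outcome = previous
        if prev_print != fingerprint(record):
            return True  # something actually changed
        # Identical record: only retry if the last attempt did not succeed.
        return prev_outcome in ("failed", "ignored")

    def remember(self, key, record, outcome):
        self._last[key] = (fingerprint(record), outcome)


store = FingerprintStore()
acme = {"name": "Acme", "phone": "555-0100"}

first = store.should_deliver("Acme", acme)
store.remember("Acme", acme, "success")
repeat = store.should_deliver("Acme", {"phone": "555-0100", "name": "Acme"})
edited = store.should_deliver("Acme", {**acme, "phone": "555-0199"})
store.remember("Acme", acme, "failed")
retry = store.should_deliver("Acme", acme)

print(first, repeat, edited, retry)  # True False True True
```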

Looking back at the scenario we started with, the Account would go through Link A to the external system, back through Link B, then get picked up by Link A but not delivered. So this pattern would involve one more hop than the previous one, but the ultimate result is the same.

This feature is similar to configuring the LocalSalesforceAdapter as described above, but differs in the following ways:

Records are blocked, not ignored (ignored records show up in Sync Events with their ignore reason)

Works for any Link going any direction (configuring LocalSalesforceAdapter only works for inbound to Salesforce, but of course breaking one side breaks the loop)

Warning

The same warning about deterministic records applies here as well (see the previous warning for more detail).

Record-based Marker

For remaining patterns including this one, we’re moving away from Valence features into customizations and strategies that you can take advantage of if the previous two options are not a good fit.

A record-based marker is some kind of identifier on the record itself that can be used to decide whether the record should be written into the target (or whether it should be picked up and written back out).

The big advantage of a record-based marker is that it is durable. After the Link run ends we still have access to the marker. This means it’s available to any Link at any later point in time, meaning this pattern works for both scheduled and realtime Links.

The most common pattern here is some kind of “last touch” field with string values like “Quickbooks” and “Salesforce”. All Links writing into Salesforce from Quickbooks assign “Quickbooks” to this field, and all Links writing into Quickbooks assign “Salesforce” to this field. Then, you filter both Links to only pick up changed records that are from the local system (or filter one side, still breaks the loop at the cost of an extra hop).
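As a rough sketch of the “last touch” pattern, assuming a field named `last_touch` and two hypothetical helper functions (in a real Link the filtering would live in your source query or a Filter):

```python
def records_to_send(changed_records, local_system):
    """Pick up only changes that originated in the local system."""
    return [r for r in changed_records if r.get("last_touch") == local_system]


def prepare_for_target(record, source_system):
    """Stamp the record with where it came from before writing it to the target."""
    stamped = dict(record)
    stamped["last_touch"] = source_system
    return stamped


# Recently changed records found in Salesforce.
changed_in_salesforce = [
    {"name": "Acme", "last_touch": "Quickbooks"},     # arrived via a Link
    {"name": "Globex", "last_touch": "Salesforce"},   # edited locally
]

outbound = records_to_send(changed_in_salesforce, local_system="Salesforce")
delivered = [prepare_for_target(r, source_system="Salesforce") for r in outbound]

print([r["name"] for r in outbound])  # ['Globex']
```

Acme is skipped because its latest change came from the other side. Globex goes out, and lands in Quickbooks marked as having come from Salesforce.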

This works well enough, but it can be a little fragile. You have to make sure that all the ways you can change a record normally (say in Salesforce if someone edits the field from its record page) mark the “last touch” field back to “Salesforce” (in this example) so that it can be picked up when you want it to be.

The easiest way to do this is in a before trigger on that SObject, but you have a challenge: how do you differentiate between the trigger firing because Valence wrote to the SObject, and all other trigger fires? Unfortunately there’s no way to tell normally, so we recommend the use of some kind of static flag that you set from a Filter and then check in your Apex trigger to let yourself know this write is a result of a Valence Link.
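The trigger-plus-flag idea looks roughly like the sketch below. It is written in Python for illustration only. In Salesforce this would be a static variable on an Apex class that a Filter sets and a before trigger reads. The names `SyncContext`, `before_save`, and `write_from_link` are hypothetical.

```python
class SyncContext:
    """Stand-in for an Apex class holding a static flag."""
    valence_write_in_progress = False


def before_save(record):
    """Stand-in for a before trigger on the SObject."""
    if not SyncContext.valence_write_in_progress:
        # An ordinary edit (user, Flow, other code): claim it for Salesforce.
        record["last_touch"] = "Salesforce"
    return record


def write_from_link(record):
    """Stand-in for a Link delivering a record with the flag raised."""
    SyncContext.valence_write_in_progress = True
    try:
        incoming = dict(record, last_touch="Quickbooks")
        return before_save(incoming)
    finally:
        SyncContext.valence_write_in_progress = False


synced = write_from_link({"name": "Acme"})
edited = before_save(dict(synced, phone="555-0199"))  # later manual edit

print(synced["last_touch"], edited["last_touch"])  # Quickbooks Salesforce
```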

Tip

If you have automations that fire and create/update other records than the arriving ones, you may want to listen to those and allow them to be emitted back out by Valence, so think carefully about how you design your flag.

Context-based Marker

This is similar to the record-based marker described above, with some key differences. With a context-based marker the implementation is a little simpler, but it only works for realtime Links.

You have a static flag somewhere like before (can be as simple as a static Boolean somewhere), but you don’t mark up the records themselves.

Let’s say you have Link A that writes into the Contact object, and Link B that listens to the Contact object using a Flow or Apex trigger.

Link A runs, and some records are on their way inbound to the Contact object. Set the flag with a Filter during the run. Ding! Alert! Valence run in progress.

Then, when the Flow or Apex trigger that drives Link B reacts to the records being written to the database, check the flag. If it’s set, ignore these records; they’ve just arrived, so don’t reflect them back outbound. If it’s not set, send them outbound, since they didn’t arrive through a Link in this execution context.

The reason this doesn’t work for scheduled Links is that once your current execution context ends you lose the flag, so it is only effective if you’re teeing up records to go outbound in the same context they are arriving inbound.

Tip

If you have automations that fire and create/update other records than the arriving ones, you may want to listen to those and allow them to be emitted back out by Valence, so think carefully about how you design your flag.

Other Bidirectional Sync Considerations

Since we’re already talking through the ramifications of syncing two tables in both directions, let’s take the opportunity to break down a few more gotchas and words to the wise.

Data Enrichment

Generally we think that a record that just arrived in a system should not be sent right back out the other direction. However, there’s an exception to this: if a record is transformed upon arrival, you may want to allow it to reflect right back out.

Here are some potential reasons:

Some default values are set in some of the fields and those values are worth sending back to the original system

Identifiers of some kind are generated on this new record

Sub-records or related records are generated

In these scenarios, you actually want the extra hop so that both systems end up with these enriched fields. Using a pattern like LocalSalesforceAdapter configuration serves you well here.

Duplicates

It is common to set up a bidirectional sync and then start getting duplicates. This is because you’re typically writing to each system with the unique identifier from that system. A new record arrives in a system and is created there, then the record is sent back the other direction carrying its new identifier, but because it’s a new identifier the upsert back the other direction doesn’t find it and inserts a record. So you get two records in the original system, one record in the other system, and the very first record is orphaned (and further edits to it will spawn more duplicates).

The fix here is straightforward. Almost all APIs return new identifiers in response to record creation, so the target Adapter delivering the records needs to do a “writeback”. A writeback is a small record, usually just a pair of identifiers, sent back to the original system so that the new identifier is correctly paired with the original source record.
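A writeback can be sketched as follows. The field names `source_id` and `target_id`, and the `create_in_target` callback, are assumptions for the example. A real Adapter would use whatever identifiers the target API returns.

```python
def deliver_with_writeback(source_records, create_in_target):
    """Create unpaired records in the target and collect identifier pairs."""
    writebacks = []
    for record in source_records:
        if record.get("target_id") is None:
            new_id = create_in_target(record)  # the API returns the new identifier
            writebacks.append(
                {"source_id": record["source_id"], "target_id": new_id}
            )
    return writebacks


def apply_writebacks(source_table, writebacks):
    """Store each new target identifier on the original source record."""
    for pair in writebacks:
        source_table[pair["source_id"]]["target_id"] = pair["target_id"]


source_table = {
    "001A": {"source_id": "001A", "name": "Acme", "target_id": None},
}


def fake_create(record):
    """Pretend target API that always assigns the same identifier."""
    return "CUST-1"


pairs = deliver_with_writeback(list(source_table.values()), fake_create)
apply_writebacks(source_table, pairs)

print(source_table["001A"]["target_id"])  # CUST-1
```

Once the pair is stored, the record coming back the other direction can be matched on `CUST-1` and upserted onto the original instead of inserted as a duplicate.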

Contention

If two systems can both edit the same record, how do you handle the scenario where both systems have independently changed the record before a sync occurs? Or, even worse, where each system fires its change at the other and both systems lose data?

This can be enormously tricky to completely solve, so most implementations settle for a “good enough” plan. The most common is to just let everything write when it arrives, and hope that syncs and edits don’t line up in a way that causes data loss. This is usually fine, honestly, if your syncs are pretty frequent and your edits infrequent (at least edits to the same record).

A slightly more refined approach is an “ignore if newer” behavior. You pass the most recent edit timestamp for a record in the source system as part of the payload, and then check the most recent edit in the target system, and whichever is newer is the winner (resulting in write or ignore). It’s not too hard to set up a Filter that does this evaluation and uses the ignore() method on RecordInFlight.
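The comparison itself is small. Below is a sketch of just the decision, with a hypothetical `decide` function. In a Valence Filter, the “ignore” branch is where you would call ignore() on the RecordInFlight. Treating a tie as “ignore” is an assumption, so pick whichever tie-break suits you.

```python
from datetime import datetime, timezone


def decide(incoming_modified, existing_modified):
    """Return 'write' if the incoming edit is newer, otherwise 'ignore'."""
    if existing_modified is None:
        return "write"  # nothing in the target yet
    return "write" if incoming_modified > existing_modified else "ignore"


source_edit = datetime(2024, 1, 1, 14, 30, tzinfo=timezone.utc)
target_edit = datetime(2024, 1, 1, 14, 45, tzinfo=timezone.utc)

print(decide(source_edit, target_edit))  # ignore
print(decide(target_edit, source_edit))  # write
print(decide(source_edit, None))         # write
```

Compare timestamps in a single timezone (UTC here), otherwise “newer” can flip depending on where each system thinks it is.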

However, this approach treats newer as better, but it’s just as likely that both edits are worth preserving. A more accurate approach would be to merge the records together and preserve both edits. As you can imagine merging records can be quite a nuanced operation…best of luck! You’ve got this.
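One way to approach merging is a field-by-field three-way merge. This sketch assumes you have kept a snapshot of the record as it looked at the last successful sync (`base`), which is a big assumption in itself. The function name and the “keep ours on conflict” rule are illustrative choices, not a recommendation.

```python
def three_way_merge(base, ours, theirs):
    """Merge two edited copies of a record against their common ancestor."""
    merged, conflicts = {}, []
    for name in sorted(set(base) | set(ours) | set(theirs)):
        b, o, t = base.get(name), ours.get(name), theirs.get(name)
        if o == t or t == b:
            merged[name] = o      # same result, or only our side changed
        elif o == b:
            merged[name] = t      # only their side changed
        else:
            merged[name] = o      # both changed differently: keep ours, flag it
            conflicts.append(name)
    return merged, conflicts


base = {"phone": "555-0100", "city": "Austin", "tier": "Silver"}
ours = {"phone": "555-0199", "city": "Austin", "tier": "Gold"}
theirs = {"phone": "555-0100", "city": "Dallas", "tier": "Platinum"}

merged, conflicts = three_way_merge(base, ours, theirs)
print(merged)     # {'city': 'Dallas', 'phone': '555-0199', 'tier': 'Gold'}
print(conflicts)  # ['tier']
```

Both non-overlapping edits survive. Only the field that both sides changed differently still needs a policy, such as newest wins or system of record.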

One more contention resolution strategy is to leverage a “system of record” style of thinking, where one system “owns” a record and that system always wins contention.

System of Record

When we introduce the idea of system of record, we are introducing primacy between our two systems. One system holds the golden record, the truth. The other system should match it, and if it doesn’t, the golden record is the correct one.

Most businesses identify a system of record for any given object or table. Maybe your Contacts treat Salesforce as the system of record, but your Accounts treat your backoffice accounting system as the system of record.

Declaring a system of record for an object can greatly simplify your strategy for resolving contention.

Sometimes, system of record gets a little more granular. Here are some patterns:

Object-level System of Record: each object is “owned” by one of the participating systems, and any contention favors that system.

Record-level System of Record: the first system that creates a record “owns” it, so each system holds a mix of records it owns and records owned by the other system. The system that owns a particular record wins during contention resolution.

Field-level System of Record: some fields on a record are owned by another system. This is quite common when you are using other tools, like tracking data, to enrich your records. Maybe 10 fields on Account belong to your tracking tool which would win contention only on edits to those 10 fields.
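Field-level ownership can be sketched as a lookup from field name to owning system. The ownership map, system names, and `resolve` function below are hypothetical, and the sketch assumes every field not listed belongs to a default owner.

```python
FIELD_OWNER = {
    "Engagement_Score": "tracking",
    "Last_Visit": "tracking",
}
DEFAULT_OWNER = "salesforce"


def resolve(existing, incoming, incoming_system):
    """Accept an incoming value only for fields the sending system owns."""
    result = dict(existing)
    for name, value in incoming.items():
        if FIELD_OWNER.get(name, DEFAULT_OWNER) == incoming_system:
            result[name] = value
    return result


existing = {"Name": "Acme", "Engagement_Score": 10}
from_tracking = {"Name": "ACME Corp", "Engagement_Score": 42}

print(resolve(existing, from_tracking, "tracking"))
# {'Name': 'Acme', 'Engagement_Score': 42}
```

The tracking tool wins on the score it owns, and its opinion about `Name` is dropped.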

System of record can tie back to everything we’ve discussed in this document.

One of our early customers was bidirectionally syncing a table in Salesforce with their backoffice system, and used field-level ownership to resolve contention. In addition, if a record arriving in Salesforce only changed fields owned by the source system, no outbound sync record would be sent. So not only did they use it for contention but also for blocking infinite loops.

You could also do the reverse, where you only pick up records to send if certain fields have changed, not just any change.
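Both variations come down to looking at which fields changed. Here is a rough sketch with hypothetical function and field names, assuming you have before and after values available (for example, in a trigger).

```python
def changed_fields(before, after):
    """Names of fields whose values differ between two versions of a record."""
    return {f for f in set(before) | set(after) if before.get(f) != after.get(f)}


def send_unless_only_foreign(before, after, owned_by_other_system):
    """Skip the outbound record if every change is to a field the other side owns."""
    return bool(changed_fields(before, after) - set(owned_by_other_system))


def send_if_watched(before, after, watched_fields):
    """The reverse: send only when at least one watched field changed."""
    return bool(changed_fields(before, after) & set(watched_fields))


owned_by_backoffice = {"Balance", "Credit_Limit"}
before = {"Name": "Acme", "Balance": 100}
inbound_only = {"Name": "Acme", "Balance": 250}
also_renamed = {"Name": "Acme Inc", "Balance": 250}

print(send_unless_only_foreign(before, inbound_only, owned_by_backoffice))  # False
print(send_unless_only_foreign(before, also_renamed, owned_by_backoffice))  # True
print(send_if_watched(before, inbound_only, {"Name"}))                      # False
```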